This section describes how the relevance level was assigned to each evaluation attribute in the EVA tool.

**Expert opinion elicitation process**

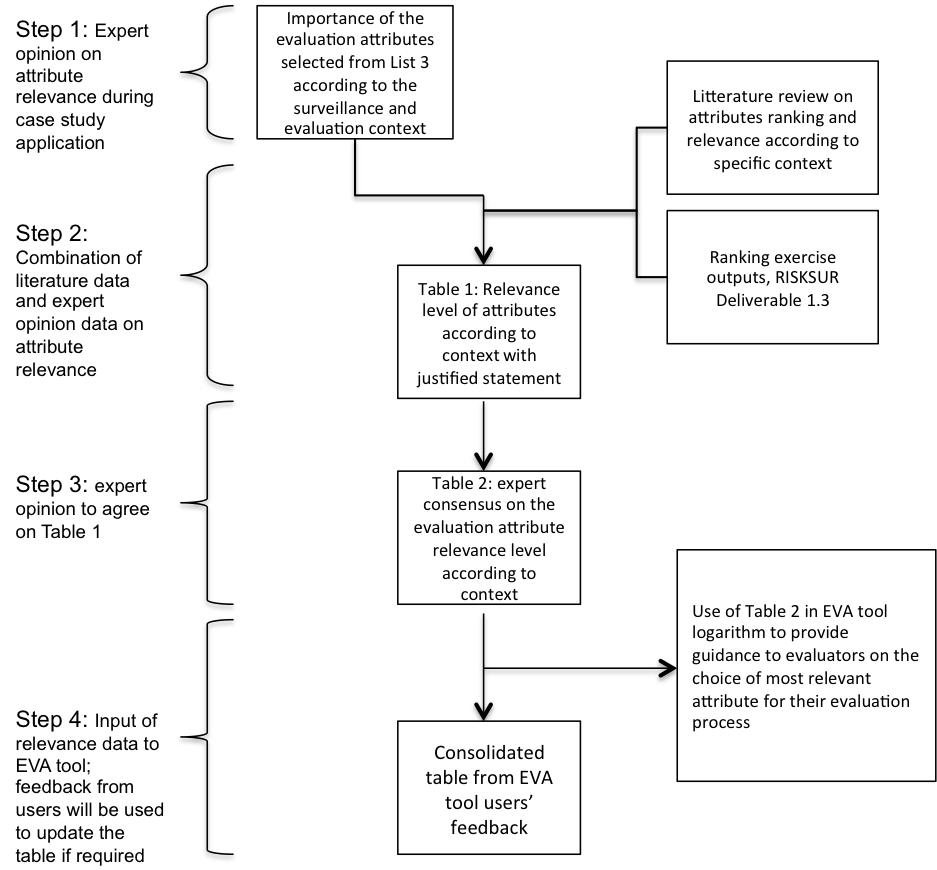

The relevance of the evaluation attributes was reviewed during the case study application, following an expert opinion elicitation process (Figure 1).

**Attribute relevance**

Relevance depended mainly on the surveillance objective (e.g. early detection, freedom from disease, case finding) and, in some situations, on the surveillance design itself (e.g. risk-based surveillance) (Table of relevance: attribute_relevance_table_final.pdf).

The relevance will ultimately be determined by the user, but we aimed to provide some basic guidance.

Information from the case study and literature review was compiled into a matrix table proposing a level of relevance for each attribute under each surveillance objective. A justification was provided for each proposed level. The table was sent to all the experts involved in the process for review and validation. Where the experts did not all agree on the level of relevance and the statement provided, we classified the degree of agreement (agreement, moderate disagreement, disagreement, strong disagreement, poor understanding).

Attributes on which the experts disagreed (disagreement or strong disagreement) were discussed at a meeting in Paris (July 23, 2015) to reach consensus.
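The selection of attributes for the consensus meeting can be sketched as a simple filter over the review outcomes. This is only an illustration: the function name, the string spellings of the agreement degrees, and the attribute names are assumptions, and the example values are placeholders rather than the experts' actual ratings.

```python
# Degrees of agreement that, per the text, were taken to the consensus meeting.
# The exact string spellings are an assumption made for this sketch.
NEEDS_DISCUSSION = {"disagreement", "strong disagreement"}


def attributes_for_discussion(review):
    """Return, in sorted order, the attributes whose review outcome
    was 'disagreement' or 'strong disagreement'."""
    return sorted(
        attribute
        for attribute, degree in review.items()
        if degree.strip().lower() in NEEDS_DISCUSSION
    )


# Hypothetical review outcomes, for illustration only.
example_review = {
    "attribute A": "agreement",
    "attribute B": "moderate disagreement",
    "attribute C": "disagreement",
    "attribute D": "strong disagreement",
    "attribute E": "poor understanding",
}

print(attributes_for_discussion(example_review))
# ['attribute C', 'attribute D']
```

In this sketch, attributes rated "moderate disagreement" or "poor understanding" are not selected, since the text names only disagreement and strong disagreement as the cases discussed at the meeting.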

Figure 1. Expert opinion process to define and agree on the level of relevance of evaluation attributes according to the surveillance and evaluation contexts.